As AI-powered chatbots such as ChatGPT become more prevalent, publishing executives are exploring the possibility of compensation for the use of their content in training such technologies.

Generative AI technology has already left people in awe of its ability to hold a live conversation, write complex computer code, and compose sonnets and raps.

“We have valuable content that’s being used constantly to generate revenue for others off the backs of investments that we make, that requires real human work, and that has to be compensated,” said Danielle Coffey, executive vice president and general counsel of the News Media Alliance.

AI chatbots such as Google’s Bard and ChatGPT are trained on large amounts of text data using deep learning neural networks, enabling them to generate responses based on patterns they have learned from the training data.

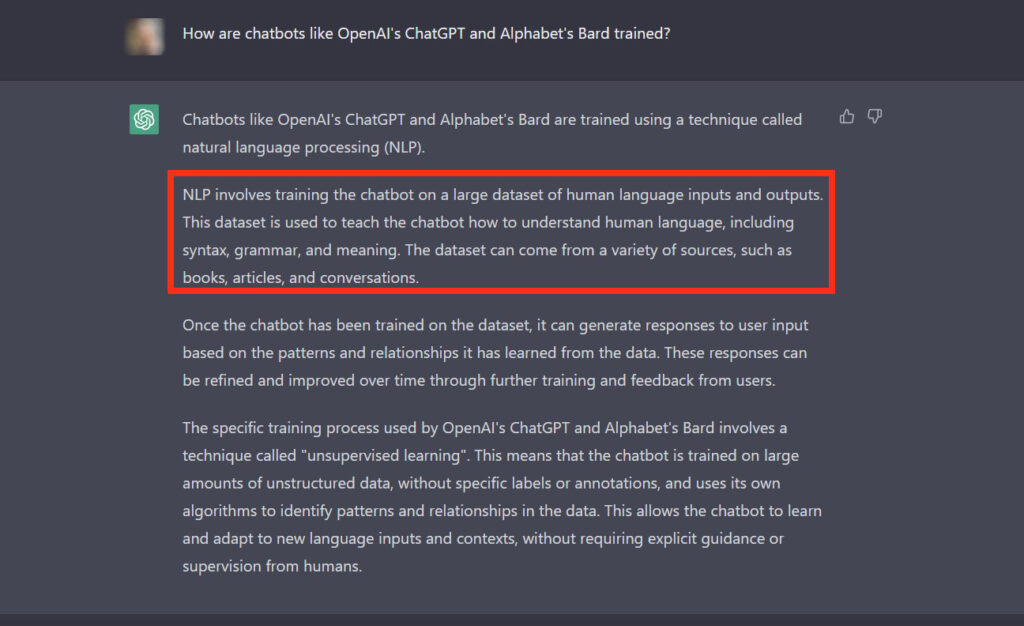

When I asked ChatGPT, “How are chatbots like OpenAI’s ChatGPT and Alphabet’s Bard trained?” it said the “natural language processing (NLP) technique is used.”

According to the chatbot, NLP involves “a large dataset of human language inputs and outputs,” which includes “a variety of sources, such as books, articles, and conversations.”

Publishing executives have therefore begun to examine the extent to which their content has been used to “train” AI tools and are considering legal options for compensation, according to people familiar with meetings organized by the News Media Alliance.

Compensation for proprietary content

During a recent investor conference, Robert Thomson, CEO of News Corp., the parent company of The Wall Street Journal, revealed that he had started talks with an undisclosed party over compensation.

“Clearly, they are using proprietary content — there should be, obviously, some compensation for that,” said Mr. Thomson.

According to sources familiar with the matter, discussions have taken place between Reddit and Microsoft regarding the use of Reddit’s content for AI training. However, a spokesperson for Reddit declined to comment on the matter.

The debate is now focused on whether AI developers have the right to scrape content off the internet and feed it into their training models. The question arises because a legal doctrine called “fair use” allows copyrighted material to be used without permission in certain cases.

Sam Altman, CEO of OpenAI, once said in an interview that, when it comes to ChatGPT, “we’ve done a lot with fair use.”

“We’re willing to pay a lot for very high-quality data in certain domains,” Altman added, citing science as one example.

Publishers are not alone

Last month, stock photo provider Getty Images sued Stability AI, the company behind the AI image generator Stable Diffusion, alleging that it used Getty’s photos to train its AI without consent or a license.

Getty claimed Stability AI stole 12 million copyrighted images and demanded $150,000 for each image, which would amount to $1.8 trillion in total compensation.
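
As a quick check on that total, the two figures reported above multiply out as follows. This is only the arithmetic, and it assumes the per-image amount would apply to every one of the 12 million images:

$$
12{,}000{,}000 \times \$150{,}000 = 1.2 \times 10^{7} \times 1.5 \times 10^{5} = \$1.8 \times 10^{12} = \$1.8\ \text{trillion}
$$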

“Media should be up in arms about this. AI is going to eat the internet and there’s no benefit for being its training materials,” tweeted Cameron Wilson in response to the WSJ article.

This article is originally from MetaNews.